Well done! This really helps to de-mystify the operations of the cache. I’ll update the Sacred Tech Scroll to clarify the replacement policy.

How far in advance are the cache entries being fetched relative to the actual code being executed?

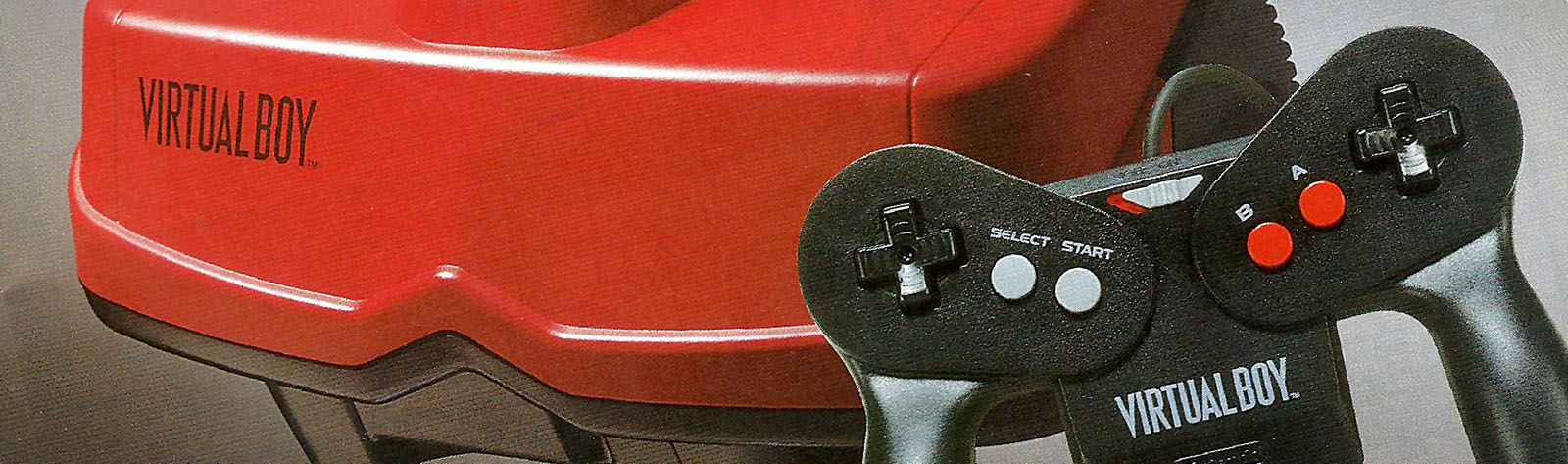

The stand orientation in these images is incorrect. I have prepared a post detailing why:

https://www.virtual-boy.com/forums/t/stand-orientation/

To the future researcher looking for answers: I revisited the audio thing for an unrelated project and took the time to work through it and find a passable solution. Java is not set up for this.

Audio output in Java is most easily accomplished via [font=Courier New]AudioSystem.getSourceDataLine()[/font], which in turn directly provides a line object and methods for sending output to the speakers. For most applications, this is how you’ll want to manage audio.

SourceDataLine has some methods for tracking playback status, namely [font=Courier New]getLongFramePosition()[/font] and [font=Courier New]available()[/font]. Although the frame position is useful for determining approximately how much data has been consumed, it does not reliably reflect how many resources are currently available to the line. And while [font=Courier New]available()[/font] does give the number of bytes that can be written without blocking, it is likewise not reliable due to an unintuitive behavior of the implementation. If there are n bytes available and you write some number of bytes m, it would be reasonable to expect that the next call to [font=Courier New]available()[/font] would give either n – m or a slightly larger number depending on playback, but that’s not always the case for whatever reason.

Ultimately, there is no way to monitor the state of resources within a SourceDataLine during playback. The emulator depends on the opposite being the case, and consequently falters pretty spectacularly.

Further experiments and profiling of Java’s audio behaviors have turned up a best-case scenario, which is still not completely satisfactory, but if it’s the best-case, it won’t get any better, right? The solution is two-fold:

1) In order to minimize the opportunities for stuttering during playback, data should be fed to the SourceDataLine object in its own thread, where it can block on a call to [font=Courier New]write()[/font] and likewise block when retrieving sample buffers via something like a BlockingQueue. This ensures data will always be made available to the SourceDataLine with minimal latency.

2) The amount of data written at once to a SourceDataLine should be as large as possible. SourceDataLine appears to introduce some overhead and begins to stutter if the data buffers are too small, and the garbage collector can potentially interrupt the software while it’s attempting to have samples ready in time. Bear in mind that the thread scheduler may give the thread something like 15ms at a time to execute. By minimizing the number of calls to [font=Courier New]write()[/font], overhead is kept low and garbage collection has less of an opportunity to interrupt playback.
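For anyone who wants to try the same arrangement, here is a minimal sketch of the feeder-thread idea from point 1. It is not the emulator's actual code: `PcmSink`, `AudioFeeder` and the stop sentinel are names made up for illustration, and the sink interface exists only so the sketch can run without audio hardware. Its single method has the same shape as `SourceDataLine.write(byte[], int, int)`.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

/** Hypothetical sink for PCM bytes; SourceDataLine.write(byte[], int, int) has this shape. */
interface PcmSink {
    int write(byte[] data, int offset, int length);
}

/** Drains queued sample buffers into a sink on a dedicated thread. */
final class AudioFeeder implements Runnable {
    private static final byte[] STOP = new byte[0];  // sentinel that ends the thread

    private final BlockingQueue<byte[]> queue;
    private final PcmSink sink;
    private final Thread thread = new Thread(this, "audio-feeder");

    private AudioFeeder(PcmSink sink, int maxQueuedBuffers) {
        this.sink = sink;
        this.queue = new ArrayBlockingQueue<>(maxQueuedBuffers);
    }

    /** Creates the feeder and starts its thread. */
    static AudioFeeder start(PcmSink sink, int maxQueuedBuffers) {
        AudioFeeder feeder = new AudioFeeder(sink, maxQueuedBuffers);
        feeder.thread.setDaemon(true);
        feeder.thread.start();
        return feeder;
    }

    /** Called by the producer (e.g. the emulation thread); blocks while the queue is full. */
    boolean submit(byte[] samples) {
        try {
            queue.put(samples);
            return true;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    /** Queues the stop sentinel and waits for the feeder thread to drain and exit. */
    void shutdown() {
        submit(STOP);
        try {
            thread.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    @Override
    public void run() {
        try {
            while (true) {
                byte[] buffer = queue.take();          // blocks until samples are ready
                if (buffer == STOP) {
                    return;
                }
                sink.write(buffer, 0, buffer.length);  // blocks until the sink accepts the data
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

With a real line that has been opened and started, something like `AudioFeeder.start(line::write, 4)` should plug in directly, where the queue depth of 4 is an arbitrary choice. A bounded queue keeps the producer from running far ahead of playback, which matters if audio is also being used to pace the emulator.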

The concern now lies in the fact that the actual delay between an action occurring on-screen and the audio for that action coming from the speakers should ideally be as short as possible, and that contrasts with Java’s approach to audio. I was able to set up a mechanism that initializes audio output with a configurable initial delay built of empty samples, and writes to the output line in buffers with a configurable length. On my personal rig the optimal setup is something around a 0.15s initial delay and a 0.1s block size, although once in a great while a very short, single stutter does occur. Eventually the JVM profiler seems to get the hang of it, so any window of interruptions in playback should be short-lived.
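To make the delay and block size concrete, here is a small sketch that converts durations into byte counts for a PCM stream. The class and method names are hypothetical, and the format used in the note below (44,100 Hz, 16-bit stereo) is only an assumption for the arithmetic, since the post doesn't state the output format.

```java
/** Converts durations into byte counts for an interleaved PCM stream. */
final class AudioBufferMath {
    private AudioBufferMath() {}

    /** Bytes needed for the given duration, rounded to the nearest whole frame. */
    static int bytesFor(double seconds, int sampleRate, int channels, int bytesPerSample) {
        int frameSize = channels * bytesPerSample;            // one sample for every channel
        int frames = (int) Math.round(seconds * sampleRate);
        return frames * frameSize;
    }

    /** A zero-filled buffer for the initial delay; zero is silence in signed PCM. */
    static byte[] silence(double seconds, int sampleRate, int channels, int bytesPerSample) {
        return new byte[bytesFor(seconds, sampleRate, channels, bytesPerSample)];
    }
}
```

Under those assumed parameters, a 0.1s block is 4,410 frames of 4 bytes each, or 17,640 bytes per call to `write()`, and the 0.15s initial delay is 26,460 bytes of zeroes written before any real samples.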

According to my time log, I spent 30.847 hours working on this problem. It was not a simple problem!

Thank the stars, that’s music to my ears. tl;dw: Nintendo mentioned during an investors meeting that they have nothing coming down the pipe for 3DS in 2019. It’s about frickin’ time.

blitter wrote:

Disassembling and making sense out of optimized, unsymbolicated assembly is not for the faint of heart and definitely not a task for a beginner.

While it’s true that someone who is just getting into programming may not be well-equipped for the task, the process in general is not as hard as you might think. With the right resources, I have faith that SpectreVR (the user) can pull off this Spectre VR (the game) project.

blitter wrote:

If your goal is to make a version of Spectre on the Virtual Boy, very little of the Mac code will help you anyway. Here are a few major points about the Mac version that will not transfer over:

Only a cross-section of the game’s code is necessary for a faithful port: the fundamental game engine and general rendering logic. The nitty-gritties about how the game communicates I/O with the OS or interacts with the windowing system aren’t all that important when porting the game to any target, let alone Virtual Boy. As long as one knows what the game wants to do, the rest can be handled by a purpose-built implementation.

Also, between those Inside Macintosh books and Macintosh Programmer’s Workshop–both of which are available online–we still have access to information regarding the old system API.

I’ve got a little somethin’ in the works…


Whoooo boy, that was… that was an experience.

I spent literally every waking hour the last two and a half days working on setting up the native module stuff, and it has been very successful. However, as you can see by the lack of anything to download attached to this post, it hasn’t been “ready for prime time” successful. Very, very nearly, but not quite.

When I finally got everything put together and flipped the audio on… the exact same stuttering and slow-down occurred that was present in the Java implementation. This of course made me suspicious, as having identical performance in the native module is preposterous. But my earlier troubleshooting involved disabling audio rendering in the Java code in exchange for some supplied zeroes, and the speed went back up. I reasoned the audio processing itself was to blame…

After the curiosity with the native audio, I switched back over to the Java implementation and merely disabled sending the rendered audio to the speakers. That also ran full-speed. In fact, it stayed full-speed while logging audio to a file, despite being the Java code. So it’s not the processing overhead at all! My best guess is that the earlier test “worked” because the initial contents of the buffer were zeroes and I was writing zeroes into it. Java may have found a way to make that work.

As far as what this means for getting good, full-speed audio output in Java, that one’s got me a bit worried. VSU Workshop manages to run all six channels full-speed in the Java code, and the native implementation has more than enough muscle to get the job done, so exactly what Java is doing or how to make it do what I want is a mystery.

Truth be told, Java Sound isn’t designed to be used as a timing source like the emulator requires. It doesn’t actually provide any means to determine how much audio has finished playing or any mechanism for signalling the application when a buffer has finished. What I’ve been doing so far is a not-exactly-documented use of SourceDataLine.available(), and it’s obviously causing problems. That was my best effort, though, and I’m inclined to think that perhaps Java Sound isn’t going to be a good option.

__________

In other news, porting the Java code over to C was pretty much as smooth as I was expecting it to be, with two exceptions: the VIP rendering code and pretty much everything to do with breakpoints.

The VIP code is a couple of monstrous functions with many variables that were designed to produce the images as efficiently as possible, which they do rather well for the most part. Porting those to C proved to be a bit troublesome, as I did a lot of sign extensions with Java’s shift operators (behavior that is guaranteed in Java but not necessarily in C) and a few other Java-centric design choices. After converting to C with the associated adjustments, there are some bugs in the rendering code that I’m not happy with. I’ll either have to try again or write new rendering code to make sure it works in both languages.
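To illustrate the shift issue, here is a sketch of two ways to sign-extend a narrow field, shown in Java. The class and method names are hypothetical, not anything from the emulator source. The first form is the shift idiom, which leans on Java's guarantee that `>>` is an arithmetic shift. The second uses only masking, XOR and subtraction, so it doesn't depend on how right-shifting a negative number behaves and should carry over to C more safely for field widths comfortably below 32 bits (the C-side integer types would still need checking).

```java
/** Two ways to sign-extend the low `bits` bits of a value (1 <= bits <= 31). */
final class SignExtend {
    private SignExtend() {}

    /** Shift idiom: push the field's sign bit to bit 31, then shift back arithmetically. */
    static int viaShifts(int value, int bits) {
        int shift = 32 - bits;
        return value << shift >> shift;
    }

    /** Shift-free form: isolate the field, flip its sign bit, then subtract the bit's weight. */
    static int viaXor(int value, int bits) {
        int signBit = 1 << (bits - 1);
        int mask = (signBit << 1) - 1;
        return ((value & mask) ^ signBit) - signBit;
    }
}
```

Both give the same results in Java, for example turning the 13-bit pattern `0x1FFF` into -1, so the second form can replace the first without changing behavior on the Java side.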

The breakpoints library is another matter altogether. Unlike the emulation core, I didn’t take care to design breakpoints in a way that would easily adapt to C. The native version of breakpoints that I came up with has some drastic differences and what code did make it over is ugly as heck after the needed modifications, and I’m overall disappointed with the whole thing. They function well enough to get games running (at full speed, naturally), but beyond that I want to toss them in the garbage.

There’s a mounting pile of other considerations that would require extensive refactoring to incorporate new things I’ve learned along the way. At this point the prospect of a total project do-over seems very appealing, but I haven’t decided what I want to do going forward.

This reply was modified 5 years ago by Guy Perfect.

I spent the last week working on my Wario Land project, which has had a fantastic start, but this isn’t the thread for that. The reason I’m posting right now is because tonight, out of curiosity, I decided to do a little research into the JNI to see what I would need to do in order to get the native emulation core set up. I figured it would be some involved process with headers and libraries every which way, but that’s not the case. In fact, working with the JNI is easy. Really easy.

It’s so easy, in fact, that I’ve decided to turn my immediate attention toward getting that native implementation set up posthaste. There’s a little bit of added work in getting the code moved over from Java, but the vast majority is done as the Java implementation in and of itself, so it shouldn’t take more than a handful of days. I’ll keep you posted.

trailboss wrote:

Ha, I know how this story ends just from experience. Jokes aside, a place to formally submit bugs would be nice

Hasn’t failed me yet. Just look at this great emulator we have already!

Bug reports go in this thread. Don’t worry, I’ll see them! You have bugs to report, don’t you?

Raverrevolution wrote:

How do I load this up in my 3DS?

You don’t, just yet. The most recent development finished up the hardware functions in a Java implementation, which is designed to run on a workstation. The next phase (after filling out those greyed-out menus) is to port the implementation to C so it can be built as a native library, then use that on 3DS.

I’d have been working on 3DS before now, but Nintendo for some unknown reason refuses to let the silly thing die. I don’t want to risk getting a homebrew solution set up just to have a firmware update blow it out of the water. And as of the time of this post, Nintendo released a game for 3DS (Kirby’s Extra Epic Yarn) a mere 24 days ago, meaning they’re not ready to take their eyes off of it just yet.

Let’s hope Kirby is the end of 3DS. I’m really itching to sink my teeth in.

VmprHntrD wrote:

It’s a nice try at an emulator definitely, guess it’s just java being java perhaps holding things back some due to how it works.

Fortunately, I was thinking ahead when I wrote the Java implementation of the emulator core. I did it in such a way that converting it to C would require minimal modification, so for the most part it’ll just be a copy/paste job and changing some data types… and turning all of the comments into [font=Courier New]/* C style */[/font]… I even plan to make functions with names like [font=Courier New]Integer_compareUnsigned()[/font] and [font=Courier New]Float_intBitsToFloat()[/font] just to keep the two code bases that much more parallel.
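As a reference point for what a C namesake of `Integer_compareUnsigned()` would need to reproduce, here is a Java sketch of the same ordering built from signed operations only. The class name is hypothetical and this is not taken from the emulator source; it only pins down the behavior of the standard library method.

```java
/** Java-side reference for behavior that a C helper of the same name would need to match. */
final class JavaHelperSemantics {
    private JavaHelperSemantics() {}

    /** Same ordering as Integer.compareUnsigned, using only signed comparison. */
    static int compareUnsigned(int x, int y) {
        // Flipping the sign bit maps unsigned order onto signed order.
        return Integer.compare(x ^ Integer.MIN_VALUE, y ^ Integer.MIN_VALUE);
    }
}
```

`Float.intBitsToFloat()` is the other kind of helper: it reinterprets the 32 bits as an IEEE 754 single rather than converting the integer's value, so `0x3F800000` comes back as `1.0f`. A C version would need to do the same reinterpretation, for instance by copying the bytes between an integer and a float of the same size.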

The JNI module that will expose the C implementation to the emulator application only needs to implement the methods declared in the VUE interface, which itself isn’t a huge deal. It’ll be a lot of busy work getting the ~236 KiB of Java source code moved over to C, but it’s nothing that requires anything resembling expertise.

Plus, it’ll give me a chance to review every line of code just in case I think of something along the way that could improve it.

trailboss wrote:

Do you plan on getting this up on Github? I think that would be a great place to host builds/report issues/make pull requests.

My opinion of Github aside, I haven’t been bothering with version control so far since I’m the only one working on the project. And even if one or two other people do get involved (which doesn’t seem likely), I’ll still probably not elect to set up a repository for it. And the source files are all in those .jar files, so it’s not a matter of making them available.

In short, using version control at this time would just be giving myself more work without adding anything of value. (-:

VmprHntrD wrote:

I’d hate to think what machine you’d need to get this to run right with the audio on.

The JVM is able to get short bursts out–longer than the 1/50 second rendering interval–so I know it’s not a limitation of CPU power at the lowest level. The native implementation written in C should shine a better light on this, since it doesn’t have any of the sandboxing overhead that the managed Java code has to deal with.

VmprHntrD wrote:

Also are some games implemented and others not on this?

The emulation core in its current state isn’t 100% compatible with the commercial library. Red Alarm in particular is mostly supported, but stopped booting after I adjusted the number of CPU cycles taken by a store operation. I don’t know what the game is doing that it would even be aware of how long a store takes, but getting the game up and running will require some investigation in the debugger. It’s a great example of why the CPU and breakpoint features are so important to have!

If anyone is technically inclined and would be able to help look into Red Alarm specifically, that would be greatly appreciated.

VmprHntrD wrote:

And thirdly the menu is grayed out I noticed for configuring the emulator settings for audio, visual, inputs.

Anything greyed out (except for the Link menu when only one window is active) isn’t implemented yet, so that’s not an error. If you want something specific to help with testing, let me know and I’ll make a point to get it set up for you.

Fire-WSP wrote:

And you entirely missing the point. There is and was interest in the Emulator but as I said from the beginning, the people today do not care so much about the next PC emulator or debugger. That is only us here who care about such stuff mostly.

Every part of the project is important, which is a much broader scope than running the core on 3DS. The documentation and Java implementation account for some 80% of the project goals, and they’ve taken a fair amount of time and effort to get to their current state. The CPU and breakpoint debuggers in particular have been instrumental for research and bug-hunting, so it’s not like any development time has been spent on frivolous things.

The remaining 20% is still on the way, but it’s abundantly clear that I’d be doing it mostly for myself. The invitation still stands that if someone needs something implemented I’d be happy to work on it, and the source code for every build released has been bundled inside of every .jar file.

Fire-WSP wrote:

[…] I have to say Bye Bye 3DS VB Emulator.

Reading the last post this will never happen anymore.

Shame.[…]

So okay, who is next in line for doing finally the right thing???

Overlooking the part where I said exactly the opposite, is this really the tone you want to convey?

With the entire emulation core implemented, with the experience of producing the emulator of my dreams, and with a sigh of relief, I’m finally freed up to do other things. I won’t be abandoning this project–far from it–but as of this latest update, it is no longer my primary spare-time focus. I’ll still work on it from time to time when other things don’t have my attention, but since interest in this project is very low, there are other things I want to be doing that I’ve been putting off.

The remaining window menus will be implemented in the coming weeks, but I won’t be sticking too strongly to my Wednesday update schedule like I have been for the past couple of months. If someone is keenly interested in something that can’t be clicked on, I’d be happy to prioritize it to make it available, but if it’s just a matter of “it’s not done yet”, then I’ll get to it when I get to it. (-:

As for what I will be doing, there’s 1) a long-neglected project with some other PVB members I’d like to get the ball rolling on, 2) something I want to do with Wario Land and 3) something related to NES that I’d like to keep a complete secret until the time comes.

For those who have stuck with me through all of this, thank you for your patience and thank you for your financial support. Planet Virtual Boy’s emulator is becoming something great and that means the world to me.

A new build is attached to this post:

New Features

• Audio is now implemented, disabled by default, toggled with Ctrl+A

• Added disassembler comments for VSU registers

Code Changes

• Rebuilt the Console class with new threading, timing and audio code

Bug Fixes

• Items in the Link menu will now retain the check mark when selected

• Triggered breakpoints will no longer steal focus from other main windows

• Corrected the address that presents BKCOL disassembler comments

• Disassembler comment detection for I/O ports now correctly masks address bits

The key attraction this time around is audio support, and the Sacred Tech Scroll has been updated as usual. There’s very little left for the document before the formal v1.0 release, and the emulator application just needs a few menu items implemented before it’s quote-unquote “ready” for its own version 1.0.

If you give the audio a go, you’ll probably notice pretty quick a bit of a glaring problem: it’s a bit too much for Java to handle unless you’ve got a hulking monster of a computer. On my own box, most games stutter pretty badly, spending more time between frames than presenting them, which is why audio is disabled by default. This isn’t a problem that’s going to go away without a native implementation driving the workload. The JVM profiler does its best to optimize the emulation core, but there’s just too much going on for it to keep up.

Audio does work correctly, though, and dare I say perfectly. Attached to this post is a .ogg file that is a log of audio output from the emulator during a short Wario Land session. If you open it up in an audio editor, you’ll see the actual samples produced by the emulator, including the simulated analog filter that centers the output around zero. All of the samples are unedited, exactly as they came out of the emulator. The only thing I did to the clip was trim off some silence at the beginning.

Also attached to this post is an IPS patch (use it with something like Lunar IPS) that will modify Wario Land slightly in order to use the [font=Courier New]HALT[/font] instruction while waiting for VIP activity. The disassembler and breakpoints came in real handy for this! Performance is improved relative to the unmodified game, but it’s still not quite fast enough on my box. That native implementation is going to be the key to getting performance back up to where it needs to be, but that’s still a ways out yet.

Lastly, a copy of VSU Workshop is attached to this post for testing purposes.

All of the internals regarding the frame animator were completely redone in this build, meaning a completely different set of potential bugs now applies. If you could play around with the window commands and linked window communication to check for bugs, it would be appreciated.


Turns out I’d forgotten to put the new “config” package into the .jar file, so the previous upload did not work. The one attached to this post should be fine.

Also, after typing out why I had to process linked simulations one cycle at a time, it occurred to me that I could check for communications while both simulations were in halt status even if the communication doesn’t raise an interrupt. This build takes that into account and sees vastly improved performance over the broken one that you never got a chance to use anyway because of the aforementioned .jar mistake…
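Here is one way to read that optimization, as a sketch rather than the emulator's actual scheduler. Everything named below (`LinkedSim`, `TimerSim`, `LinkScheduler`) is hypothetical. The assumption is that while both CPUs are halted no software can start anything new, so the only things that can happen are already-scheduled hardware events: interrupts and communication activity. If each unit can report how far away its next such event is, both can jump ahead together instead of stepping one cycle at a time.

```java
/** Hypothetical view of one emulated unit; not the emulator's real interface. */
interface LinkedSim {
    boolean isHalted();
    /** Cycles until this unit's next interrupt or communication event. */
    int cyclesUntilNextEvent();
    void advance(int cycles);
}

/** Toy unit for demonstration: halted until a single scheduled event wakes it. */
final class TimerSim implements LinkedSim {
    private boolean halted = true;
    private int untilEvent;
    long elapsed;

    TimerSim(int untilEvent) { this.untilEvent = untilEvent; }

    public boolean isHalted() { return halted; }
    public int cyclesUntilNextEvent() { return untilEvent; }

    public void advance(int cycles) {
        elapsed += cycles;
        if (halted) {
            untilEvent -= cycles;
            if (untilEvent <= 0) {
                halted = false;  // the event wakes the CPU
            }
        }
    }
}

final class LinkScheduler {
    private LinkScheduler() {}

    /** Advances both units by `cycles` in lockstep; returns the number of advance rounds used. */
    static int run(LinkedSim a, LinkedSim b, int cycles) {
        int rounds = 0;
        while (cycles > 0) {
            int step = 1;  // default: strict one-cycle lockstep
            if (a.isHalted() && b.isHalted()) {
                // Both idle: skip to whichever unit's event comes first.
                int next = Math.min(a.cyclesUntilNextEvent(), b.cyclesUntilNextEvent());
                step = Math.max(1, Math.min(next, cycles));
            }
            a.advance(step);
            b.advance(step);
            cycles -= step;
            rounds++;
        }
        return rounds;
    }
}
```

In this toy setup, two halted units with events 100 and 250 cycles away cover the first 100 cycles in a single round, then fall back to one cycle per round once the first unit wakes up.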


Although the outward functionality appears to be relatively the same, reworking the application UI to accommodate linked pairs of emulation windows was a lot of intricate work. But it’s done now!

Attached to this post is a new build: (See following post)

New Features

• The main application window has been redesigned

• The communication port is now implemented

Code Changes

• Unmapped reads in the [font=Courier New]0x02000000-0x02FFFFFF[/font] range will now be zeroes

• The localization driver was updated to support substitutions

• A new class MainWindow was created for representing the application interface

• Multiple windows can now be instantiated and linked

• Global input commands are now implemented and are configurable

• The Config class and its new helper classes are in a new “config” package

Bug Fixes

• Writes to DPSTTS were forwarding to DPCTRL, which I don’t think is correct

• Simultaneous Left/Right and Up/Down are disabled by default, correcting a crash in Wario Land

__________

To use the new communication feature:

• Create an additional emulator window with File → New Window

• Associate communication peers through Emulation → Link

• Use the emulator windows as you normally would

When only one emulator window exists, the Link sub-menu will not be available. When multiple windows exist, the Link sub-menu will display a list of all current windows, allowing the user to select one to associate as a communication peer. If the window is already linked, a Disconnect option is also available in the Link sub-menu.

Communication Workshop has been attached to this post to help with testing.

I did a lot of experimentation with boosting performance when linking simulations, but the unfortunate fact of the matter is that the only way to keep linked instances synchronized is to process them one cycle at a time. I thought that by checking whether both simulations were in halt status, I could boost performance by skipping to the next interrupt, but communications don’t always raise interrupts, and there’s no way to predict ahead of time when the software is going to trigger a communication event…

… So long story short, linking in the current Java implementation is slow. Hopefully the eventual native implementation can handle linking at full speed.

Each emulation window animates frames in its own thread as a consequence of how timers work. However, linked frames will only use the animation thread from whichever window initiated simulation processing. I’m pretty sure I got all the logic correct, but since thread locks are rather intermingled under a variety of circumstances, it’s possible there might be some combination of circumstances that could cause the application to deadlock.

The following describes the general contract of the linking behavior:

• Unlinked windows operate in their own threads

• When paused windows are linked, the one that invokes the Run command controls both

• When running windows are linked, the one that invokes the Link command controls both

• When a running window and a paused window are linked, the paused window is activated and the one that invokes the Link command controls both

• When running linked windows are disconnected, the “slave” window resumes control of itself

• Closing a linked window invokes the Disconnect command

• The following commands will only apply to the linked window that invokes them: Reset, Frame Advance, Step Over, and any user-defined breakpoints

Keep an eye out for any reproducible problems that might occur with thread management, and please report any problems in a reply. I did my best to make sure it works correctly, but this is a bit of an atypical method of threading windows and I don’t want to say with absolute certainty that it can’t break.

__________

Besides the link feature, the main window was redesigned and all of the old features remain implemented. However, the only new feature that was implemented is the Game/Debug Mode setup, with additional menu options to be implemented later. One thing to keep in mind with regards to window modes:

• In Game Mode, emulation will always begin when a ROM is loaded

• In Debug Mode, emulation will always be paused when a ROM is loaded

This should be the most useful behavior for most users and might wind up in the Settings window depending on what I decide on the matter.

Ctrl+3 still cycles anaglyph colors, but it is a temporary command that will be removed once the Video Settings interface is implemented, which will allow the user to configure whatever colors they wish.

The debugger interfaces still do not contain any safeguards while the simulation is running, and should not be used unless the simulation is paused. This is also temporary, as I didn’t want to delay this build any longer than necessary.

Step, Step Over and Frame Advance can now be used while the simulation is running. All three will cause emulation to pause after they complete.

This reply was modified 5 years, 1 month ago by Guy Perfect.

No build this week, but there’s plenty of news about updates. Part of what’s taking so long is a management shuffle at work resulting in an unusually heavy workload, and part of it is… well, let’s find out!

For starters, the link implementation is all set up and works just fine. I was able to test it with a truly terrifying hack-n-slash second Console window:

The “Communication Workshop” program in the screenshot can be found attached to yonder post, but since I can probably count on one hand the number of people who can make effective use of it, there’s not much incentive for anyone to go grab it. But it does indeed work correctly, which is the important part.

As may be apparent, the main application window needs to be adjusted in order to properly present two linked simulations to the user. I decided to take the opportunity to redesign the main window entirely, fixing various usability problems along the way and introducing my vision of the final application UI.

__________

Many Hats

The next build introduces a new application paradigm: presentation is split into two modes depending on the user’s preferences. In Game Mode, the top-level window is only the menu bar and video display, and in Debug Mode, the MDI that has been used up until now will be used. Debug Mode is toggled on and off in the File menu.

In Game Mode, the File menu has these options. Most should be pretty self-explanatory, though New Window will produce another top-level main window with all the same features. This is what will enable link play, as we’ll see in a bit.

In Debug Mode, the File menu becomes slightly different as it adds options for ROM file management as well as individual control over SRAM (in Game Mode, SRAM is automatic using the ROM filename). Modifications made to ROMs in the hex editor or, eventually, the assembler can be saved as new files for ease of development. ROMs and SRAM can also be resized.

The new Emulation menu brings options that were previously known only to the monks of the mountain who study such things. All of the old keyboard commands will continue to function, but now every command available becomes more accessible thanks to being visible on-screen. Step and Step Over are hidden in Game Mode, since they’re not useful there.

The Link sub-menu brings up a list of additional windows, allowing the user to select one to associate as a communication peer. This is where the New Window option from the File menu comes in handy.

All of the settings will actually be accessible from a single window, but the Settings menu has some common options to help the user get to where they need to go without excessive navigation of the menus. Input, Video and Audio all bring up a new Settings window, but they each automatically select the appropriate tab/page. The Settings item simply shows the Settings window in its most recent state.

Additional settings–such as localization/language, disassembler configuration and the eventual native implementation selector–will also be in the Settings window, but simply not have shortcuts in the Settings drop-down menu itself.

The Debug menu should be familiar, as it’s the same as the Window menu up until this point. The entire menu is hidden in Game Mode.

__________

While most of the menu items are right-this-second unimplemented, I was still able to get a decent chunk done over the week despite the activity at work…

Anyone familiar with the source code layout will find that App has been broken free of its connotation with the main application interface, and a new class MainWindow was introduced. App functions mainly as a window manager, but also houses the global configuration accessible through the Settings menu.

The code that drives localization of on-screen control messages has also been updated to add some more flexibility that wasn’t there before. The new features will benefit messages that require substitutions, such as the main window title and the Breakpoints window error message. With the new improvements, 100% of the strings are now fully localized and can be swapped out with a new localization file at runtime. An associate of mine has agreed to do a Japanese translation when the time comes, so it won’t just be US English through the end of time.
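As an illustration of what message substitution can look like, here is a minimal sketch. The `{name}` token syntax, the class name and the key names are all assumptions made for the example; the localization file format used by the emulator isn't described in this post.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Fills {name} tokens in a localized message template. */
final class MessageSubstitution {
    private static final Pattern TOKEN = Pattern.compile("\\{([A-Za-z0-9_.]+)\\}");

    private MessageSubstitution() {}

    /** Replaces each {name} token with its value; tokens with no value are left as-is. */
    static String substitute(String template, Map<String, String> values) {
        Matcher matcher = TOKEN.matcher(template);
        StringBuilder out = new StringBuilder();
        while (matcher.find()) {
            String value = values.get(matcher.group(1));
            String replacement = (value != null) ? value : matcher.group();
            // quoteReplacement keeps '$' and '\' in the value from being treated specially.
            matcher.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        matcher.appendTail(out);
        return out.toString();
    }
}
```

A window title template such as `{app} - {rom}` could then live in the localization file, with the application supplying the two values at runtime. Keeping the word order inside the template is what lets a translation rearrange the sentence without code changes.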

What I’m currently working on involves input from the user. All of the keyboard bindings are about to be user-configurable, which includes things like “F5 to run/pause” and “D is the B Button”. It’s being set up to eventually support controller/joystick input as well, although that will require native modules to pull off.
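A rough sketch of how configurable bindings like these could be stored follows. The class name and the command identifiers (`emulation.runPause`, `pad.b`) are invented for the example, and the one-key-per-command rule is just a simplifying assumption. Only the `KeyEvent.VK_*` constants are real AWT names.

```java
import java.awt.event.KeyEvent;
import java.util.HashMap;
import java.util.Map;

/** Maps key codes to command names, with one key per command. */
final class KeyBindings {
    private final Map<Integer, String> byKeyCode = new HashMap<>();

    /** Binds a key to a command, dropping any key the command was bound to before. */
    void bind(int keyCode, String command) {
        byKeyCode.values().remove(command);
        byKeyCode.put(keyCode, command);
    }

    /** Returns the command bound to a key, or null if the key is unbound. */
    String lookup(int keyCode) {
        return byKeyCode.get(keyCode);
    }

    /** Defaults matching the two examples mentioned in the post. */
    static KeyBindings defaults() {
        KeyBindings bindings = new KeyBindings();
        bindings.bind(KeyEvent.VK_F5, "emulation.runPause");
        bindings.bind(KeyEvent.VK_D, "pad.b");
        return bindings;
    }
}
```

Keying the table by an integer code rather than by a keyboard-specific type leaves room for controller buttons to share the same lookup later, provided their codes are kept from colliding with the keyboard's.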

The audio output from the VSU is a digital signal that gets converted to analog and attenuated with the volume wheel. All of that processing takes place on a small board mounted next to one of the displays, so tapping into the digital line shouldn’t be too problematic.

Having said that, the audio quality is the same on the analog side of things because the only processing is an RC circuit that applies an inaudible ~7.234 Hz high-pass filter, followed by the attenuation from the volume wheel. The audio signal in Virtual Boy isn’t irreversibly transformed on output like it is on NES and some other systems.
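For reference, a first-order RC high-pass like that can be approximated digitally with the textbook one-pole recurrence y[n] = α·(y[n−1] + x[n] − x[n−1]), where α = RC / (RC + Δt) and RC = 1 / (2π·f). The sketch below is that generic filter, not necessarily what the emulator does, and the class name is hypothetical. The cutoff comes from the figure above; the sample rate is left as a parameter because it isn't stated here.

```java
/** One-pole digital approximation of an RC high-pass filter. */
final class HighPassFilter {
    private final double alpha;
    private double prevIn;
    private double prevOut;

    HighPassFilter(double cutoffHz, double sampleRateHz) {
        double rc = 1.0 / (2.0 * Math.PI * cutoffHz);  // RC time constant for the cutoff
        double dt = 1.0 / sampleRateHz;                // time between samples
        this.alpha = rc / (rc + dt);
    }

    /** Feeds one input sample and returns the filtered sample. */
    double process(double in) {
        double out = alpha * (prevOut + in - prevIn);
        prevIn = in;
        prevOut = out;
        return out;
    }
}
```

Fed a constant level, the output starts near that level and decays toward zero, which is the "centers the output around zero" effect: any DC offset is removed while audible frequencies pass through essentially unchanged.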

This beast keeps getting closer and closer to completion. Another update, another attached build:

New Features

• Bit string instructions are now implemented

• The wait controller is now implemented

• Illegal instructions now display in the disassembler as [font=Courier New]—[/font]

• Added disassembler comments for remaining hardware component registers

Code Changes

• Researched and implemented correct timing for DPBSY and SBOUT

• Breakpoints interface moved into Debugger package where it belongs

• Deleting a breakpoint will now select the next available breakpoint in the list

• Tweaked timing code to always refresh between frames

• Tweaked read and write CPU cycle counts

• The Console window now displays images with a one-frame delay, as the hardware does, in order to facilitate slower software rendering

Bug Fixes

• Interrupt handling now correctly sets interrupt masking level

• Corrected a read bit in SCR that prevented Mario Clash from reading input

• Writing into frame buffer memory will now be visible in the Console window

• Corrected disassembler treating illegal opcodes as bit strings

• Clicking in the list of breakpoints now commits any breakpoint edits

• Disassembler comments for interrupts should now appear even without a breakpoint

• Logical Not ([font=Courier New]![/font] operator) in Condition and Goto now works properly when used on boolean and integer values

An edit to the number of CPU cycles taken by bus accesses seems to have improved performance across the board. Apparently Java wasn’t the problem so much as the simulated CPU was too slow, and now most games run full-speed on my workstation. Waterworld, which was previously so choppy as to be unplayable, now runs smoothly, and the mysterious flickering in Mario’s Tennis has stopped as well.

Red Alarm does not boot in this build. It seems to be especially sensitive to system timings, and when I do get it to run, some frames exhibit errors like blank contents or failure to clear the previous frame. It will take some research, as it does depend on WRAM and VRAM bus access latency, so until further notice the emulator will not have 100% compatibility with commercial games.

The Sacred Tech Scroll now documents the display timings, the hardware component memory map, further details on bit string instructions and even the communication port. I wasn’t able to have link support ready to go in time for this update, so I guess you know what to expect next time. (-:


Did some more experiments today, which came with an update to Communication Workshop, attached to this post. The Sacred Tech Scroll has been updated with my findings.

1) When initiating a communication with the internal clock selection, it will immediately process without a peer and load [font=Courier New]0xFF[/font] into CDRR.

This appears to be true when another system is linked and powered on, but with it powered off or disconnected, CDRR gets garbage bits. This is unfortunate, as it makes it more difficult to automatically negotiate which unit gets to host the clock signal.

5) Does the C-Int-Inh or CC-Int-Inh bit corresponding with the source of the interrupt need to be set in order to acknowledge the interrupt? The literature says CC-Int-Inh works for both, but I haven’t tested it.

There are two distinct sources for communication interrupts: one configured via CCR and the other through CCSR. If either register’s condition triggers an interrupt, that register’s Int-Inh bit must be set to acknowledge it.

This can be tested with the new Communication Workshop. If you store [font=Courier New]0x01[/font] into CDTR, it will unconditionally attempt to acknowledge any interrupt with a write to C-Int-Inh. Similarly, [font=Courier New]0x02[/font] makes it try CC-Int-Inh. If any other value is in CDTR, interrupts will be acknowledged automatically.

The text next to the IRQ field in the output describes what happened. It will say either “CCR” or “CCSR” if a single register was needed, “Both” if both registers qualified, or “Twice” if the first attempt specified by CDTR failed and the interrupt handler was called a second time.
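The acknowledge behavior can be modeled in a few lines. This is a sketch of my reading of the findings above, not hardware-accurate code: it assumes C-Int-Inh belongs to CCR and CC-Int-Inh belongs to CCSR, it tracks each source as a simple flag, and the class and method names are made up.

```java
/** Toy model of the two communication interrupt sources and their acknowledge bits. */
final class CommInterruptModel {
    private boolean ccrPending;   // raised by the condition configured through CCR
    private boolean ccsrPending;  // raised by the condition configured through CCSR

    void raiseFromCcr()  { ccrPending = true; }
    void raiseFromCcsr() { ccsrPending = true; }

    /** Setting C-Int-Inh acknowledges only the CCR source. */
    void writeCIntInh()  { ccrPending = false; }

    /** Setting CC-Int-Inh acknowledges only the CCSR source. */
    void writeCcIntInh() { ccsrPending = false; }

    /** The interrupt stays asserted until every pending source is acknowledged. */
    boolean interruptAsserted() { return ccrPending || ccsrPending; }

    /** Which register(s) currently need acknowledging. */
    String pendingSources() {
        if (ccrPending && ccsrPending) return "Both";
        if (ccrPending) return "CCR";
        if (ccsrPending) return "CCSR";
        return "None";
    }
}
```

In this model, acknowledging through the wrong register leaves the interrupt asserted, so the handler would run again, which matches the "Twice" case reported by Communication Workshop.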

7) I haven’t yet tested the behaviors of CC-Rd or CC-Smp with only one unit or without a pending communication on a remote unit. It’s getting late and I’m tired. I’ll do it tomorrow!

If there is no connected unit or the connected unit is powered off, CCSR automatically behaves as though a phantom second unit has the same configuration in that register.

8) Will changing C-Clk-Sel while C-Stat is set abort the current communication? I’d hate to think that the link component could soft-lock itself on both units by waiting for an external master unit that will never come…

A communication initiated with C-Start cannot be canceled, but changing C-Clk-Sel from External to Internal will cause the normal internal behavior to carry out, even if you don’t write to C-Start.


This is some fantastic work. If you’re desperate for customers hit me up, but I’d be happy to wait for upgrades to the design once it takes hold.